Introduction

Musical training can be very laborious. Learning to sing, for example, involves attending classes that teach you to visualise the right note in your head before you sing it. This ability, called musical imagery, is the capacity to form an "image" of a note before playing or singing it, and it improves with practice.

This ability is very important for musicians because it lets them imagine the interval between two notes they want to play or sing, and then reproduce it on their instrument or with their voice [1]. Imagining a piece of music also gives a sense of its tempo, which makes it a useful strategy for finding that tempo. Musicians who travel without their instruments can still work from the score: rather than simply reading and understanding it, they transcribe it into sound in their head.

This notion is called voluntary musical imagery, or auditory imagery. The development of new electronic musical interfaces opens up potential novel approaches to music education, and gestural interactive systems and interfaces have great potential for practice in music pedagogy [2].

Details of the project

The mirror could be used to learn disciplines such as playing a musical instrument. The initial objective of the project was to build an example module that lets people learn such a practice. However, interaction with the mirror relies only on the person standing in front of it, without any other equipment. For that reason, a module of this kind would not have been suitable for most musical instruments.

First idea

This is why the first idea for the project was to create a module for learning the theremin. The theremin was a perfect candidate because it is played without any direct contact between the device and the user's hands. A first demonstration version of the theremin module is already functional on the mirror.

The module allows the user to play the theremin remotely simply by using the mirror.

White bars, which can be switched on and off, indicate the available frequencies as well as the positions of minimum and maximum volume. The user controls the gain with the height of their left hand and the frequency with the horizontal (x-axis) position of their right hand. The mirror retrieves the position of the user's hands in space using pose estimation and displays them on the screen over the bars.
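
The mapping from hand position to sound can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the module's actual code. The pitch range (220 to 880 Hz), the use of pixel coordinates, and the exponential pitch mapping are all hypothetical. An exponential mapping makes equal horizontal distances correspond to equal musical intervals.

```python
# Hypothetical pitch range of the virtual theremin (not given in the text).
F_MIN = 220.0   # frequency at the left edge of the frame (A3)
F_MAX = 880.0   # frequency at the right edge of the frame (A5)


def hand_to_frequency(x: float, width: float) -> float:
    """Map the right hand's horizontal pixel position to a frequency in Hz.

    Exponential mapping (an assumption): each equal step in x multiplies the
    frequency by the same ratio, i.e. adds the same musical interval.
    """
    t = min(max(x / width, 0.0), 1.0)          # normalise and clamp to [0, 1]
    return F_MIN * (F_MAX / F_MIN) ** t


def hand_to_gain(y: float, height: float) -> float:
    """Map the left hand's vertical pixel position to a gain in [0, 1].

    Screen y grows downwards, so a raised hand (small y) gives a high gain.
    """
    t = min(max(y / height, 0.0), 1.0)
    return 1.0 - t
```

For example, with a 1280-pixel-wide frame, a right hand at x = 640 maps to 440 Hz. A left hand at the top of a 720-pixel-high frame gives full gain.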

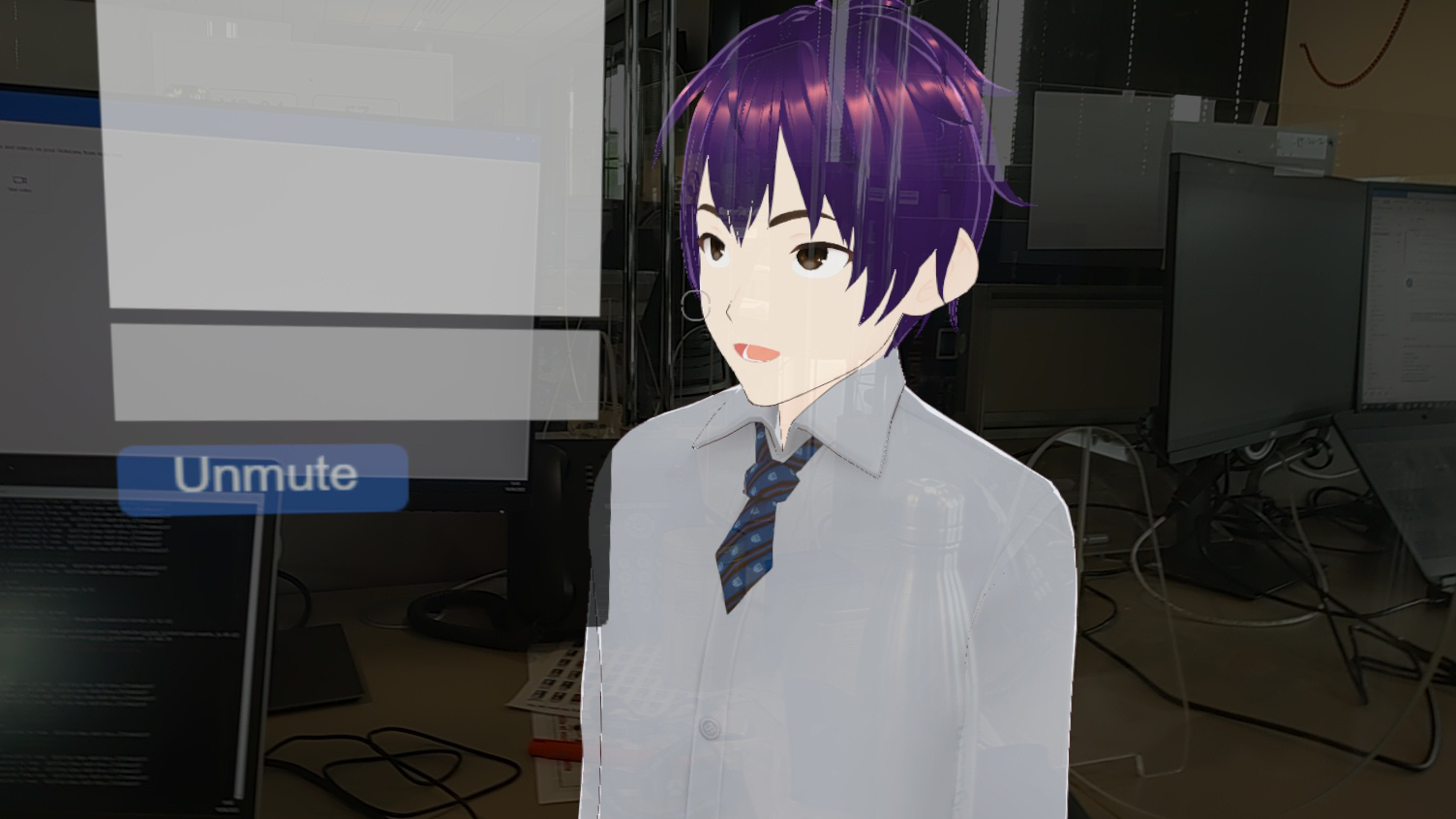

Basics of the theremin module with tutorial implemented on Art Table

A bubble follows each hand to indicate the precise position of the gain and frequency in space. The user can generate music simply by positioning their hands in space. The module uses a synthesizer developed on top of the operating system software used by the mirror.
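
The synthesizer itself is not described in detail. As an illustration only, a theremin-like tone can be produced by a sine oscillator whose frequency and gain come from the two hand positions. The hypothetical `SineVoice` class below keeps its phase between buffers. This means that changing the frequency from one buffer to the next does not cause an audible click.

```python
import math


class SineVoice:
    """Minimal theremin-like oscillator (hypothetical, not GOSAI's synthesizer)."""

    def __init__(self, sample_rate: int = 44100):
        self.sample_rate = sample_rate
        self.phase = 0.0  # current phase in radians, carried across buffers

    def render(self, freq: float, gain: float, n_samples: int) -> list[float]:
        """Return n_samples of a sine wave at freq (Hz) scaled by gain."""
        step = 2.0 * math.pi * freq / self.sample_rate  # phase increment per sample
        out = []
        for _ in range(n_samples):
            out.append(gain * math.sin(self.phase))
            # Wrap the phase to [0, 2*pi) so it never grows without bound.
            self.phase = (self.phase + step) % (2.0 * math.pi)
        return out
```

Each call could receive the latest values from the hand mapping, for example `voice.render(440.0, 0.5, 512)` for one 512-sample buffer.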

Theremin module on the Augmented Mirror

Because pose estimation is computationally expensive, the mirror's responsiveness was unsatisfactory. It was difficult to play in rhythm or to "target" a specific frequency. The sound was driven by the hand position shown on the screen, which lagged slightly behind the user's "real" hand. This mismatch between motion and sound made the module too unpleasant to practise with.

Second idea

The project has since been redesigned so that sound is no longer linked to movement. Instead, practice is based on frequency estimation. The user plays an instrument or sings in front of the mirror, which captures the emitted frequency and displays it on the screen as a dot with a particle effect. The lower the frequency, the further to the left the dot appears, and vice versa. Several options can be launched from the sub-menu of the "theremin" application already implemented on the mirror; in particular, the user can launch different music tutorials.
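
Placing the dot from the estimated frequency can be sketched as follows. The frequency bounds (roughly C2 to C6) are hypothetical. A logarithmic scale is assumed so that each octave occupies the same width on the mirror; the description above only states that lower frequencies appear further to the left.

```python
import math
from typing import Optional


def frequency_to_x(freq: float, width: float,
                   f_low: float = 65.41, f_high: float = 1046.50) -> Optional[float]:
    """Horizontal position of the dot for a detected frequency (hypothetical).

    Frequencies are placed on a log2 scale between f_low (left edge) and
    f_high (right edge); out-of-range values are clamped to the edges.
    Returns None when no pitch was detected (freq <= 0), so the dot can be hidden.
    """
    if freq <= 0:
        return None
    t = math.log2(freq / f_low) / math.log2(f_high / f_low)
    t = min(max(t, 0.0), 1.0)
    return t * width
```

An octave doubles the frequency, so with these defaults each of the four octaves spans about a quarter of the screen width.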

For example, the user can launch the "La vie en rose" tutorial, in which notes fall from the top of the screen and must be played in rhythm. The module no longer involves movement or position at all, only the frequency emitted by the instrument or the voice.

When the user sings in tune, the bars falling from the top of the screen gradually turn green and the particle effect on the dot intensifies. When the user sings out of tune, the bars turn increasingly red. The score achieved is displayed for a few seconds at the end of the song.
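
One possible way to implement this feedback is a three-step approach, sketched below with hypothetical names:

1. Measure how far the sung frequency is from the target note in cents (hundredths of a semitone).
2. Blend the bar colour from green to red according to that distance.
3. Compute the score as the share of frames sung within a tolerance.

The 50-cent tolerance is also an assumption made for illustration.

```python
import math


def cents_off(freq: float, target: float) -> float:
    """Signed deviation of freq from target in cents (100 cents = 1 semitone)."""
    return 1200.0 * math.log2(freq / target)


def bar_colour(cents: float, tolerance: float = 50.0) -> tuple[int, int, int]:
    """RGB colour blended from green (in tune) to red (>= tolerance cents off)."""
    t = min(abs(cents) / tolerance, 1.0)   # 0 = perfectly in tune, 1 = fully off
    return (round(255 * t), round(255 * (1 - t)), 0)


def score(frames: list[tuple[float, float]], tolerance: float = 50.0) -> int:
    """Percentage of (sung, target) frames within tolerance cents of the target.

    Frames with no detected pitch (sung frequency <= 0) count as misses.
    """
    if not frames:
        return 0
    hits = sum(1 for sung, target in frames
               if sung > 0 and abs(cents_off(sung, target)) < tolerance)
    return round(100 * hits / len(frames))
```

For instance, `cents_off(880.0, 440.0)` is 1200.0, one octave. A frame sung exactly on the target gives a pure green bar, `(0, 255, 0)`.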

The vision of the project is to train users to link, more or less consciously, the way they handle their instrument or voice with the sound they imagine in their head. The visual feedback on the mirror shows them where they are, whether they have played or sung the right note, and how to correct it if not. My approach differs in the display options it offers. The user can launch different music tutorials, show or hide the bars that locate the notes, and display the position of their hands. Practising on a mirror is also an advantage because, unlike with a phone, the user practises standing up; keeping the body upright makes singing easier.

This project is intended to be suitable for multiple instruments, while being entertaining and instructive.

Technique and architecture

The augmented mirror runs on an operating system called GOSAI. The music practice module is a JavaScript application built directly on GOSAI; it uses the p5.js library to create the sketch and draw 2D elements. The backend will use two drivers written for GOSAI specifically for this project. The first gives GOSAI access to the device's microphone. The second performs frequency estimation on the microphone input: it takes the incoming sound wave and returns a musical frequency.
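
The algorithm used by the frequency estimation driver is not described here. As a stand-in, the sketch below applies autocorrelation, a common textbook method for pitch detection, to a mono buffer of float samples. The buffer is compared with delayed copies of itself, and the delay at which it best matches itself is taken as the period of the note. The function names and the 60 to 1000 Hz search range are hypothetical. A helper also converts the estimate to the nearest equal-tempered note name, assuming A4 = 440 Hz.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def estimate_frequency(samples: list[float], sample_rate: int,
                       f_min: float = 60.0, f_max: float = 1000.0) -> float:
    """Estimate the fundamental frequency (Hz) of a mono buffer by autocorrelation.

    Returns 0.0 when the buffer is silent or no period is found in range.
    """
    n = len(samples)
    if n == 0:
        return 0.0
    mean = sum(samples) / n
    x = [s - mean for s in samples]                  # remove any DC offset
    if sum(v * v for v in x) < 1e-12:                # silence: nothing to estimate
        return 0.0

    lag_min = max(1, int(sample_rate / f_max))       # shortest period searched
    lag_max = min(int(sample_rate / f_min), n - 2)   # longest period searched

    # corr[k]: similarity of the buffer with itself delayed by k samples.
    # One extra lag is computed for the interpolation step below.
    corr = [sum(x[i] * x[i + k] for i in range(n - k)) for k in range(lag_max + 2)]

    # Lag 0 is always the largest value, so skip its main lobe: search only
    # after the autocorrelation has first become negative.
    start = next((k for k in range(1, lag_max + 1) if corr[k] < 0), None)
    if start is None:
        return 0.0
    start = max(start, lag_min)
    if start > lag_max:
        return 0.0

    best = max(range(start, lag_max + 1), key=lambda k: corr[k])

    # Parabolic interpolation around the peak gives a sub-sample period estimate.
    a, b, c = corr[best - 1], corr[best], corr[best + 1]
    denom = a - 2 * b + c
    shift = 0.5 * (a - c) / denom if denom != 0 else 0.0
    return sample_rate / (best + shift)


def nearest_note(freq: float) -> str:
    """Name of the equal-tempered note closest to freq, e.g. 'A4' for 440 Hz."""
    midi = round(69 + 12 * math.log2(freq / 440.0))  # MIDI note number
    return NOTE_NAMES[midi % 12] + str(midi // 12 - 1)
```

Pure Python keeps the sketch dependency-free but is slow; a real driver would more likely compute the autocorrelation with NumPy or an FFT. At 44.1 kHz, a 2048-sample buffer covers about 46 ms of audio, close to three periods of the lowest note in this range.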

The GOSAI microphone driver reads the audio stream from the device's hardware and passes it to the "frequency_estimation" module. This module detects the frequency emitted in the stream, as well as the gain and other parameters, and stores the data in the Redis database. The processing.py file retrieves the frequency estimation data from Redis and sends it to display.js via Socket.IO. The display then initialises a new music exercise and passes it the data it needs to run. Various new tools, such as the sub-menu system, were also developed on GOSAI for the project. The sub-menu lets GOSAI applications offer options that the user can activate or deactivate manually, as they wish, through pose estimation.
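
The data flow described above (driver, Redis, processing.py, Socket.IO, display.js) can be sketched in Python without any running services. In this hypothetical version, a plain dictionary stands in for Redis and a callback stands in for a Socket.IO emit. The key "frequency_estimation", the event name "frequency" and the JSON payload fields are assumptions, not GOSAI's actual names.

```python
import json
import time
from typing import Callable, Optional

# Hypothetical stand-in for the Redis database, so the sketch runs without a server.
store: dict = {}


def driver_publish(frequency: float, gain: float) -> None:
    """What the frequency estimation driver might write after each audio buffer."""
    store["frequency_estimation"] = json.dumps(
        {"frequency": frequency, "gain": gain, "timestamp": time.time()}
    )


def processing_step(emit: Callable[[str, dict], None]) -> Optional[dict]:
    """One iteration of a processing.py-style loop.

    Reads the latest estimate from the store and forwards it to the display
    through `emit` (a stand-in for a Socket.IO emit call).
    """
    raw = store.get("frequency_estimation")
    if raw is None:
        return None                      # nothing has been estimated yet
    data = json.loads(raw)
    emit("frequency", data)
    return data
```

With a real Redis server, the dictionary would be replaced by a client's `set` and `get` calls, and the callback by the Socket.IO server's `emit` method.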