Motion-based 3D avatars are becoming increasingly common in various projects and platforms. The advancement of artificial intelligence in pose estimation makes it possible to animate graphic models in real-time following a person's movements. Some models allow the user's position to be retrieved and their movements to be tracked through a 3D character. This tutorial teaches how to program an interactive avatar that follows movements and works in a simple web browser. The module is light, dynamic, and easily accessible.

Introduction

Following repeated lockdowns, video meetings are evolving to include 3D projections of users. This paves the way for a new type of augmented reality meeting that allows remote manipulation of objects [1]. Video game developers are also using this technology to replace expensive and restrictive motion capture [2].

Pose estimation is a very active research field, driven by applications in robotics, entertainment, health, sports science, and other areas.

Pose estimation has made real progress in recent years. TensorFlow released its PoseNet model in 2017, followed by MoveNet.Lightning and MoveNet.Thunder, which have become references in the field [3]. Google also offers MediaPipe, a cross-platform, customizable ML solution for live and streaming media. It provides face detection, iris tracking, hand tracking, pose estimation, hair segmentation, object detection, and other solutions [4].

Programs like Zoom, Google Meet, or Microsoft Teams have become part of the work routine of many people working from home. With the massive use of applications for video conferencing, video lessons, and group or private video calls, these tools have evolved significantly through updates and added features.

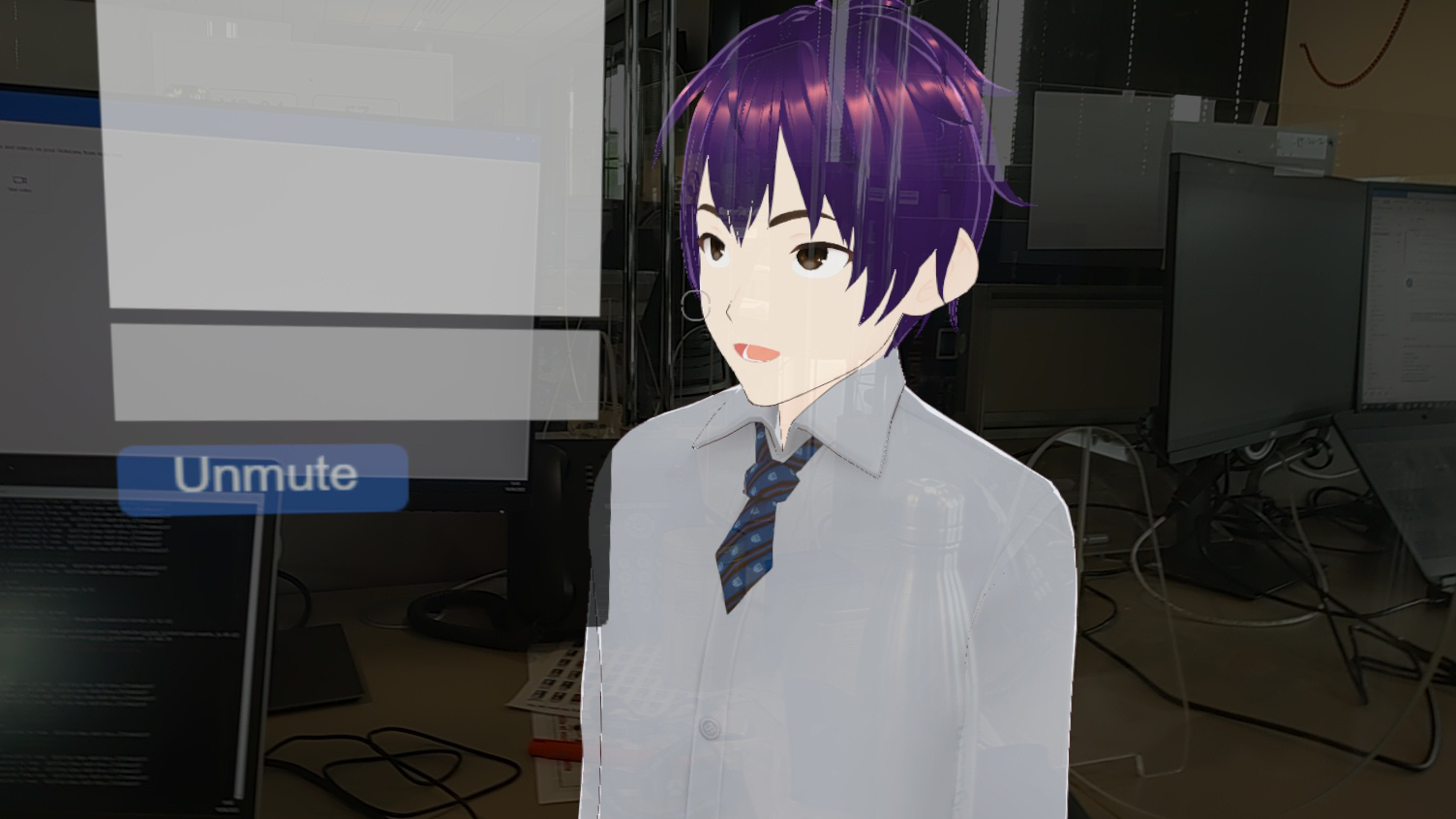

New applications and features make it possible to replace a user's appearance in a video with an avatar that moves the same way as the user and replicates their facial expressions during a discussion. The avatar can closely resemble the user's real appearance, or it can be a character. This type of application makes it possible to participate in video meetings without the user being seen.

Facebook has announced the creation of a metaverse: a persistent, fictional virtual universe in which individuals could move through shared three-dimensional spaces. The avatar is increasingly associated with digital identity. Its use spread through massively multiplayer online games (MMOs) from 1995 onwards. Today, it is entering the social, personal, and professional spheres through socio-digital media [5].

The objective of this tutorial is to teach the creation of an interactive 3D avatar. The program retrieves the coordinates of a user in front of their webcam and animates the model according to the person's movements.

The full code is available on GitHub [6].

State of the art: Browser-based interactive avatars

To create an animated avatar, most systems display a model that was animated in dedicated software (Blender, 3ds Max, Maya, Unity, Houdini...). The animations are authored directly in the software and then imported into a program for display, for example in a game.

Some projects animate a 3D model directly from motion capture. In the project "Real-time Avatar Animation from a Single Image" [7], Saragih et al. build 3D models from a single photograph. A tool such as Unity or Maya can then animate the model from MediaPipe coordinates. For 2D animation, Pose Animator gives excellent rendering; an online demonstration works with the FaceMesh and PoseNet models [8].

Unfortunately, Pose Animator does not track finger movements. Adapting this system to MediaPipe and adding finger tracking could yield a free 2D avatar. Another approach is to adapt the coordinates in real time to animate a character in Blender using Daz Studio, as Nguyen et al. do [9].

Technology used

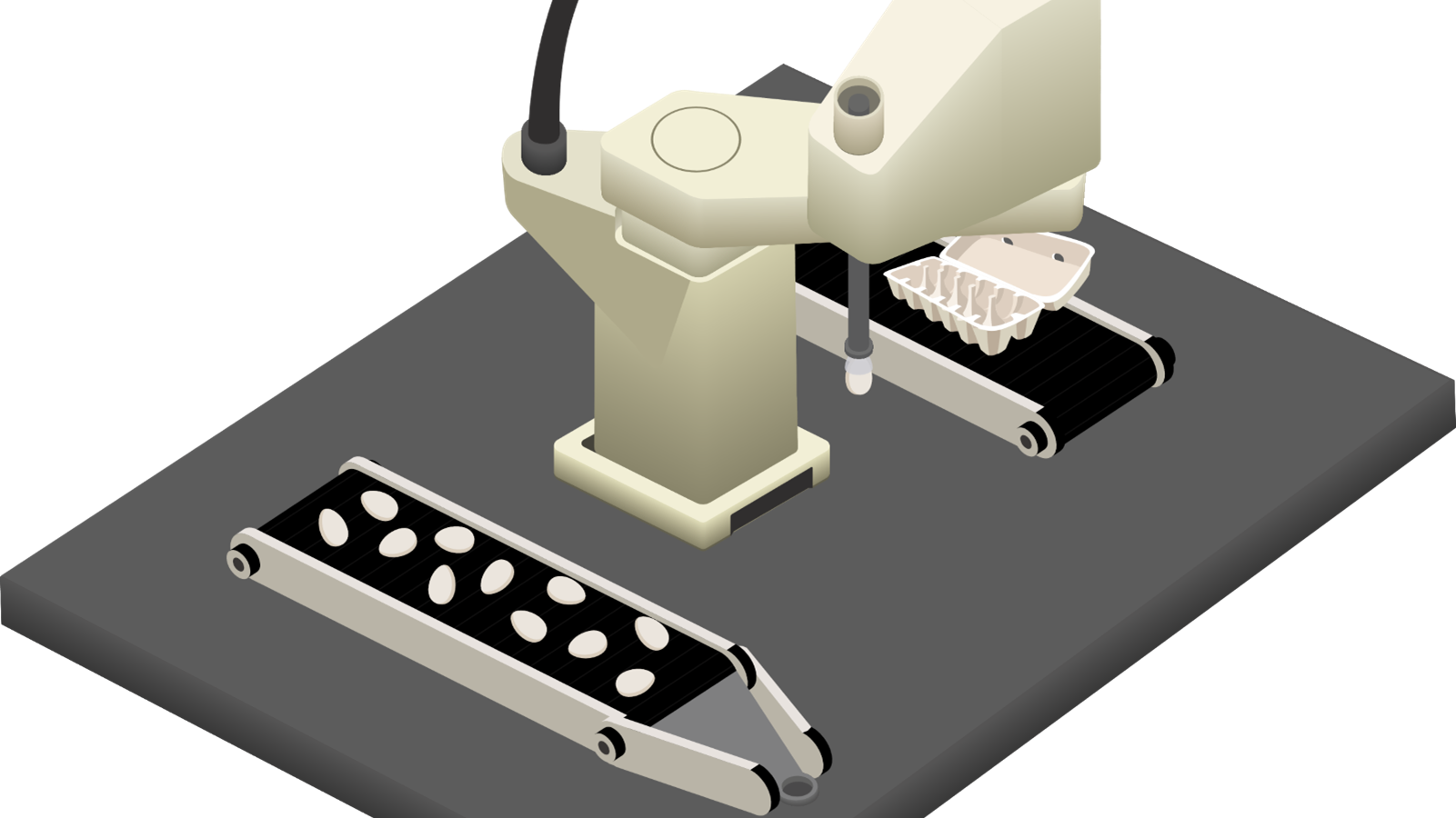

ESLA (Educative Sign Language Avatar) is implemented entirely in JavaScript. The user's coordinates are retrieved with the Pose solution from MediaPipe. In this tutorial, it is coded on the "Gosai" operating system [12] to run on an augmented mirror [13].
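As a rough illustration of what the retrieved coordinates look like, MediaPipe Pose returns 33 landmarks per frame, each with normalized `x`, `y`, `z` values and a `visibility` score. The sketch below is not taken from the ESLA code. It assumes a landmark array in that shape and uses hypothetical helper names (`LANDMARK`, `subtract`, `jointAngle`) to compute the elbow angle from the left shoulder, elbow, and wrist landmarks (indices 11, 13, and 15). The sample frame is made up for the example.

```javascript
// Indices of a few MediaPipe Pose landmarks (left arm).
const LANDMARK = { LEFT_SHOULDER: 11, LEFT_ELBOW: 13, LEFT_WRIST: 15 };

// Vector pointing from landmark a to landmark b.
function subtract(b, a) {
  return { x: b.x - a.x, y: b.y - a.y, z: b.z - a.z };
}

// Angle in degrees at joint `mid`, between the segments mid->start and mid->end.
function jointAngle(start, mid, end) {
  const u = subtract(start, mid);
  const v = subtract(end, mid);
  const dot = u.x * v.x + u.y * v.y + u.z * v.z;
  const norm = Math.hypot(u.x, u.y, u.z) * Math.hypot(v.x, v.y, v.z);
  if (norm === 0) return NaN; // degenerate case: two landmarks coincide
  // Clamp to [-1, 1] to guard against floating-point drift before acos.
  const cos = Math.min(1, Math.max(-1, dot / norm));
  return (Math.acos(cos) * 180) / Math.PI;
}

// Hypothetical frame: only the three landmarks used here are filled in.
const landmarks = [];
landmarks[LANDMARK.LEFT_SHOULDER] = { x: 0.5, y: 0.3, z: 0, visibility: 0.99 };
landmarks[LANDMARK.LEFT_ELBOW] = { x: 0.5, y: 0.5, z: 0, visibility: 0.98 };
landmarks[LANDMARK.LEFT_WRIST] = { x: 0.7, y: 0.5, z: 0, visibility: 0.97 };

const elbowAngle = jointAngle(
  landmarks[LANDMARK.LEFT_SHOULDER],
  landmarks[LANDMARK.LEFT_ELBOW],
  landmarks[LANDMARK.LEFT_WRIST]
);
console.log(elbowAngle.toFixed(1)); // prints "90.0"
```

An angle like this is one possible input for posing an avatar joint. A real pipeline would likely also filter out landmarks with low `visibility` before using them.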

JavaScript is a flexible programming language. It is one of the core technologies of web development and can be used on both the front end and the back end. It is also a versatile and robust language for video games: developers can combine JavaScript with 2D and 3D libraries, across a variety of platforms and tools, to create fully-fledged games in the browser or on external game engine platforms [14].

Three.js is a JavaScript library for creating 3D scenes in a web browser. It renders into an HTML canvas without requiring a plugin. It is a powerful library for creating three-dimensional models and games. With just a few lines of JavaScript, it can produce anything from simple 3D patterns to photorealistic, real-time scenes. The library can create complex 3D geometries and animate and move objects. Three.js supports textures and materials. It also provides various light sources to illuminate scenes, advanced postprocessing effects, custom shaders, loaders for objects from 3D modeling software... It is intuitive, easy to use, and very well documented.
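To move an avatar's bone from tracked coordinates, a limb direction has to be turned into a rotation. Three.js offers `Quaternion.setFromUnitVectors(vFrom, vTo)` for this. The sketch below reimplements the same idea in plain JavaScript so that it runs without the library. The helper names and the choice of +Y as the bone's rest axis are assumptions for illustration, not the tutorial's code.

```javascript
// Normalize a 3D vector given as [x, y, z].
function normalize([x, y, z]) {
  const len = Math.hypot(x, y, z);
  return [x / len, y / len, z / len];
}

// Cross product of two 3D vectors.
function cross(a, b) {
  return [
    a[1] * b[2] - a[2] * b[1],
    a[2] * b[0] - a[0] * b[2],
    a[0] * b[1] - a[1] * b[0],
  ];
}

// Quaternion [x, y, z, w] that rotates unit vector `from` onto unit vector `to`.
function quaternionFromUnitVectors(from, to) {
  const dot = from[0] * to[0] + from[1] * to[1] + from[2] * to[2];
  let q;
  if (dot < -1 + 1e-9) {
    // Opposite vectors: rotate 180 degrees about an axis perpendicular to `from`.
    q = Math.abs(from[0]) > Math.abs(from[2])
      ? [-from[1], from[0], 0, 0]
      : [0, -from[2], from[1], 0];
  } else {
    // Axis = from x to, w = 1 + cos(angle); normalizing gives the half-angle form.
    q = [...cross(from, to), 1 + dot];
  }
  const len = Math.hypot(...q);
  return q.map((c) => c / len);
}

// Rotate vector v by unit quaternion q = [x, y, z, w].
function applyQuaternion(v, [x, y, z, w]) {
  const u = [x, y, z];
  const t = cross(u, v).map((c) => 2 * c);
  const ut = cross(u, t);
  return [0, 1, 2].map((i) => v[i] + w * t[i] + ut[i]);
}

// Hypothetical bone resting along +Y, to be pointed along +X.
const restAxis = [0, 1, 0];
const target = normalize([1, 0, 0]);
const q = quaternionFromUnitVectors(restAxis, target);
const rotated = applyQuaternion(restAxis, q);
console.log(rotated.map((c) => c.toFixed(3)));
```

In a Three.js scene, a quaternion in this `[x, y, z, w]` order could be applied to a bone with `bone.quaternion.set(...q)`, where `bone` stands for whichever object in the loaded model is being driven.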
